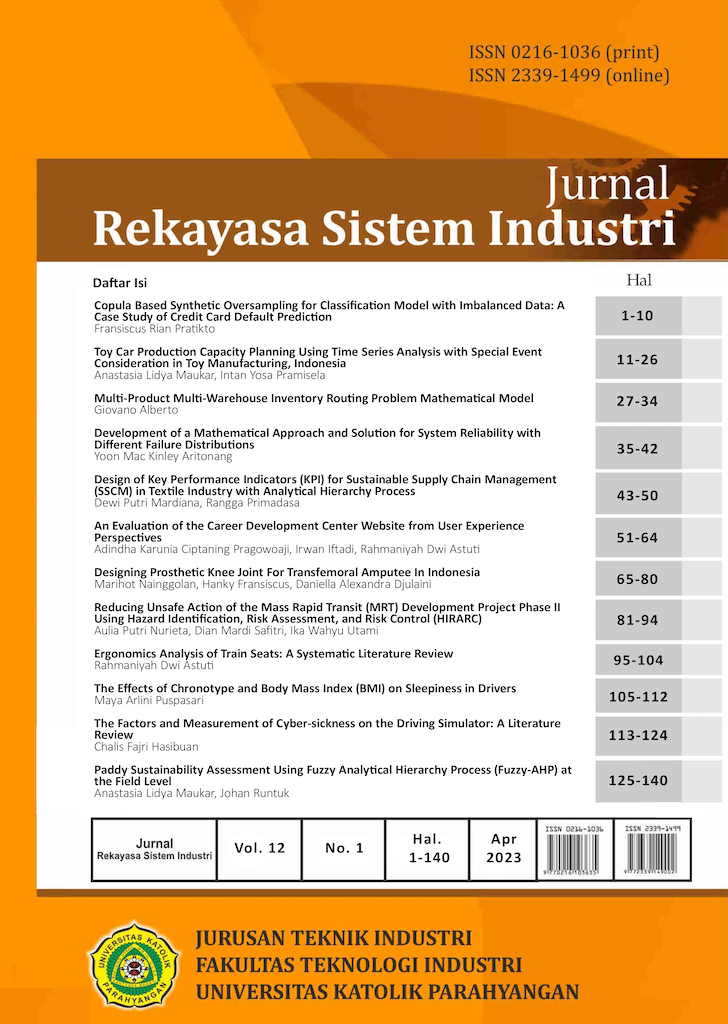

Copula Based Synthetic Oversampling for Classification Model with Imbalanced Data: A Case Study of Credit Card Default Prediction

DOI:

https://doi.org/10.26593/jrsi.v12i1.6380.1-10

Keywords:

synthetic oversampling, copula, classification model, Metalog distribution, k-Nearest Neighbor

Abstract

A machine learning classification model for detecting abnormalities is usually developed using imbalanced data, in which abnormal instances are far fewer than normal ones. Because the data are imbalanced, the learning process is dominated by normal instances, and the resulting model may be biased toward the normal class. The most common method for coping with this problem is synthetic oversampling. Most synthetic oversampling techniques are distance-based, usually relying on the k-Nearest Neighbor method. However, patterns in data can be captured either through distance or through correlation. This research proposes a synthetic oversampling technique based on correlation, expressed as the joint probability distribution of the data. The joint probability distribution is represented using a Gaussian copula, while the marginal distributions are modeled with three alternatives: the Pearson distribution system, the empirical distribution, and the Metalog distribution system. The proposed technique is compared with several commonly used synthetic oversampling techniques in a case study of credit card default prediction. The classification model uses the k-Nearest Neighbor method and is validated using k-fold cross-validation. We found that the classification model using the proposed oversampling method with Metalog marginal distributions achieves the highest total accuracy.
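To make the copula idea concrete, here is a minimal Python sketch of Gaussian copula oversampling using empirical marginals, which is one of the three marginal options named above. It is not the authors' implementation. The function name `gaussian_copula_oversample`, the rank-based normal scores, the linear-interpolation quantile, and the small jitter in the Cholesky step are all illustrative assumptions. Each minority-class column is mapped to normal scores Φ⁻¹(rank / (n + 1)), and the correlation matrix R of those scores is estimated and factored as R = L Lᵀ. Each new row is then drawn as x_j = F̂_j⁻¹(Φ(y_j)), where y = L e and e ~ N(0, I).

```python
import math
import random
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal N(0, 1)


def _ranks(values):
    """1-based ranks; tied values receive the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def _correlation(cols):
    """Pearson correlation matrix of the given columns (constant columns -> 0)."""
    d, n = len(cols), len(cols[0])
    centered = [[v - sum(c) / n for v in c] for c in cols]
    ss = [sum(v * v for v in c) for c in centered]
    corr = [[1.0] * d for _ in range(d)]
    for a in range(d):
        for b in range(a + 1, d):
            denom = math.sqrt(ss[a] * ss[b])
            num = sum(x * y for x, y in zip(centered[a], centered[b]))
            r = num / denom if denom > 0 else 0.0
            corr[a][b] = corr[b][a] = max(-1.0, min(1.0, r))
    return corr


def _cholesky(a, jitter=1e-9):
    """Lower-triangular L with L @ L.T ~= a; diagonal clamped for numerical safety."""
    d = len(a)
    low = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(max(a[i][i] - s, jitter))
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low


def _empirical_quantile(sorted_vals, u):
    """Inverse empirical CDF via linear interpolation between order statistics."""
    n = len(sorted_vals)
    pos = u * (n - 1)
    lo = min(int(math.floor(pos)), n - 1)
    hi = min(lo + 1, n - 1)
    return sorted_vals[lo] + (pos - lo) * (sorted_vals[hi] - sorted_vals[lo])


def gaussian_copula_oversample(minority, n_new, seed=None):
    """Draw n_new synthetic rows from a Gaussian copula fitted to `minority`."""
    rng = random.Random(seed)
    n, d = len(minority), len(minority[0])
    cols = [[row[j] for row in minority] for j in range(d)]
    # Pseudo-observations rank/(n+1) lie strictly in (0, 1), so inv_cdf is defined.
    scores = [[_STD_NORMAL.inv_cdf(r / (n + 1)) for r in _ranks(c)] for c in cols]
    low = _cholesky(_correlation(scores))
    sorted_cols = [sorted(c) for c in cols]
    synthetic = []
    for _ in range(n_new):
        e = [rng.gauss(0.0, 1.0) for _ in range(d)]
        y = [sum(low[i][k] * e[k] for k in range(i + 1)) for i in range(d)]
        u = [_STD_NORMAL.cdf(v) for v in y]  # correlated uniforms
        synthetic.append([_empirical_quantile(sorted_cols[j], u[j]) for j in range(d)])
    return synthetic


if __name__ == "__main__":
    minority = [[1.0, 10.0], [2.0, 19.0], [3.0, 31.0], [4.0, 42.0], [5.0, 48.0]]
    for row in gaussian_copula_oversample(minority, n_new=3, seed=0):
        print(row)
```

Because the empirical quantile only interpolates between observed order statistics, every synthetic value in this sketch stays within its column's observed range. Parametric marginals such as the Pearson or Metalog systems could instead place synthetic values beyond that range. The synthetic rows would then be appended to the minority class before training the k-Nearest Neighbor classifier.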
